Introducing OnnxSharp and 'dotnet onnx'

I’m happy to announce a tiny open source project of mine for parsing and manipulating ONNX files in C# called OnnxSharp and an accompanying .NET tool called dotnet-onnx (initial version 0.2.0).

| What | Links and Status |
|---|---|
| OnnxSharp | |
| dotnet-onnx | |

Most of OnnxSharp is simply the C# code generated by running the protobuf tool:

```
./protoc.exe ./onnx.proto3 --csharp_out=OnnxSharp
```

Hence, the library is bound by how this tool generates source code from the ONNX protobuf schema defined in onnx.proto3, including naming. I will therefore not cover the generated code here. Instead, let’s jump right into a quick guide to the library and tool and some of the actual features I have added on top of the generated source code.

Quick Guide

Install the latest version of .NET:

- PowerShell (Windows): https://dot.net/v1/dotnet-install.ps1

- Bash (Linux/macOS): https://dot.net/v1/dotnet-install.sh

Code

| What | How |
|---|---|
| Install | dotnet add PROJECT.csproj package OnnxSharp |
| Parse | var model = ModelProto.Parser.ParseFromFile("mnist-8.onnx"); |
| Info | var info = model.Graph.Info(); |
| Clean | model.Graph.Clean(); |
| SetDim | model.Graph.SetDim(); |
| Write | model.WriteToFile("mnist-8-clean-dynamic.onnx"); |

Tool

| What | How |
|---|---|
| Install | dotnet tool install dotnet-onnx -g |
| Help | dotnet onnx -h |
| Info | dotnet onnx info mnist-8.onnx |
| Clean | dotnet onnx clean mnist-8.onnx mnist-8-clean.onnx |
| SetDim | dotnet onnx setdim mnist-8.onnx mnist-8-setdim.onnx |

Running dotnet onnx -h will show the available commands:

```
Inspect and manipulate ONNX files. Copyright nietras 2021.

Usage: dotnet onnx [command] [options]

Options:
  -?|-h|--help  Show help information.

Commands:
  clean         Clean model for inference e.g. remove initializers from inputs
  info          Print information about a model e.g. inputs and outputs
  setdim        Set dimension of reshapes, inputs and outputs of model e.g. set new dynamic or fixed batch size.

Run 'dotnet onnx [command] -?|-h|--help' for more information about a command.
```

But what do these do? Let’s look at a simple example.

Example mnist-8.onnx

You can find example ONNX files and models at https://github.com/onnx/models, also called the ONNX Model Zoo. Let’s download the mnist-8.onnx model that can be used for predicting handwritten digits. The 8 here refers to the OpSet version for that ONNX model. I won’t cover details of the ONNX format here, but instead refer to the documents on GitHub and specifically Open Neural Network Exchange - ONNX.

Assuming you have .NET installed, you can then install the dotnet-onnx tool by running:

```
dotnet tool install dotnet-onnx -g
```

To get basic information about the model you can then run:

```
dotnet onnx info mnist-8.onnx
```

This will print Markdown formatted information about the model in tables like:

```
# mnist-8.onnx

## Inputs without Initializer
### Tensors
|Name  |Type      |ElemType|Shape    |SizeInFile|
|:-----|:---------|:-------|--------:|---------:|
|Input3|TensorType|Float   |1x1x28x28|        32|

## Outputs
### Tensors
|Name            |Type      |ElemType|Shape|SizeInFile|
|:---------------|:---------|:-------|----:|---------:|
|Plus214_Output_0|TensorType|Float   | 1x10|        34|

## Inputs with Initializer
### Tensors
|Name                              |Type      |ElemType|Shape    |SizeInFile|
|:---------------------------------|:---------|:-------|--------:|---------:|
|Parameter5                        |TensorType|Float   |  8x1x5x5|        36|
|Parameter6                        |TensorType|Float   |    8x1x1|        32|
|Parameter87                       |TensorType|Float   | 16x8x5x5|        37|
|Parameter88                       |TensorType|Float   |   16x1x1|        33|
|Pooling160_Output_0_reshape0_shape|TensorType|Int64   |        2|        48|
|Parameter193                      |TensorType|Float   |16x4x4x10|        38|
|Parameter193_reshape1_shape       |TensorType|Int64   |        2|        41|
|Parameter194                      |TensorType|Float   |     1x10|        30|

## Initializers (Parameters etc.)
|Name                              |DataType|Dims     |π(Dims)|[v0,v1..vN] | (Min,Mean,Max)        |SizeInFile|
|:---------------------------------|:-------|--------:|------:|-----------------------------------:|---------:|
|Parameter193                      |Float   |16x4x4x10|   2560|(-7.595E-001,-1.779E-003,1.186E+000)|     10265|
|Parameter87                       |Float   | 16x8x5x5|   3200|(-5.089E-001,-3.028E-002,5.647E-001)|     12824|
|Parameter5                        |Float   |  8x1x5x5|    200|(-9.727E-001,-7.360E-003,1.019E+000)|       823|
|Parameter6                        |Float   |    8x1x1|      8|(-4.338E-001,-1.023E-001,9.164E-002)|        53|
|Parameter88                       |Float   |   16x1x1|     16|(-4.147E-001,-1.554E-001,1.328E-002)|        86|
|Pooling160_Output_0_reshape0_shape|Int64   |        2|      2|                             [1,256]|        46|
|Parameter193_reshape1_shape       |Int64   |        2|      2|                            [256,10]|        39|
|Parameter194                      |Float   |     1x10|     10|(-1.264E-001,-4.777E-006,1.402E-001)|        62|

## Value Infos
### Tensors
|Name                        |Type      |ElemType|Shape     |SizeInFile|
|:---------------------------|:---------|:-------|---------:|---------:|
|Parameter193_reshape1       |TensorType|Float   |    256x10|        40|
|Convolution28_Output_0      |TensorType|Float   | 1x8x28x28|        48|
|Plus30_Output_0             |TensorType|Float   | 1x8x28x28|        41|
|ReLU32_Output_0             |TensorType|Float   | 1x8x28x28|        41|
|Pooling66_Output_0          |TensorType|Float   | 1x8x14x14|        44|
|Convolution110_Output_0     |TensorType|Float   |1x16x14x14|        49|
|Plus112_Output_0            |TensorType|Float   |1x16x14x14|        42|
|ReLU114_Output_0            |TensorType|Float   |1x16x14x14|        42|
|Pooling160_Output_0         |TensorType|Float   |  1x16x4x4|        45|
|Pooling160_Output_0_reshape0|TensorType|Float   |     1x256|        47|
|Times212_Output_0           |TensorType|Float   |      1x10|        35|
```

This same info can be printed in C# via:

```csharp
using Onnx;

var model = ModelProto.Parser.ParseFromFile(@"mnist-8.onnx");
var graph = model.Graph;
var info = graph.Info();
System.Console.WriteLine(info);
```

Of particular interest in the above is that the model has a set of inputs with an initializer. ONNX files can list both actual inputs and parameters as inputs. The only way to tell them apart is to check whether a given input has an initializer. If it does, it is typically a parameter of the model, e.g. the weights of a convolution.

That is, if you look at the graph.Input property in C# (continuing from above):

```csharp
foreach (var input in graph.Input)
{
    System.Console.WriteLine(input.Name);
}
```

then this will print:

```
Input3
Parameter5
Parameter6
Parameter87
Parameter88
Pooling160_Output_0_reshape0_shape
Parameter193
Parameter193_reshape1_shape
Parameter194
```

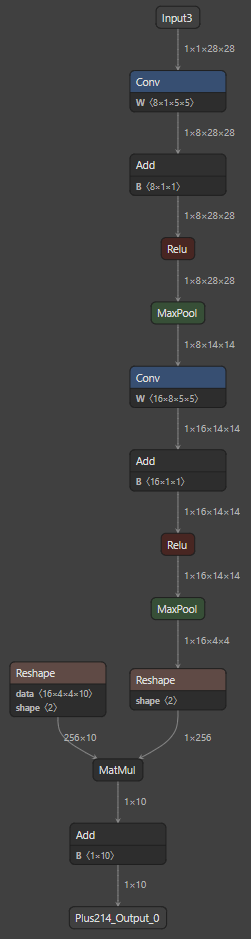

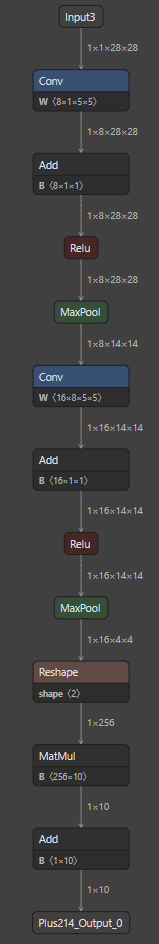

You can compare this to how this model looks in the excellent Netron tool.
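To make the distinction between actual inputs and parameters concrete, here is a minimal, self-contained sketch of splitting the input names by whether an initializer with the same name exists. It uses plain string collections filled with the names from the listings above instead of the OnnxSharp types, so it only illustrates the idea and is not how the library implements it.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Input names as printed by the foreach loop above.
var inputNames = new List<string>
{
    "Input3", "Parameter5", "Parameter6", "Parameter87", "Parameter88",
    "Pooling160_Output_0_reshape0_shape", "Parameter193",
    "Parameter193_reshape1_shape", "Parameter194",
};

// Initializer names as listed in the "Initializers" table of the info output.
var initializerNames = new HashSet<string>
{
    "Parameter193", "Parameter87", "Parameter5", "Parameter6", "Parameter88",
    "Pooling160_Output_0_reshape0_shape", "Parameter193_reshape1_shape",
    "Parameter194",
};

// An input backed by an initializer is a parameter; the rest are actual inputs.
var actualInputs = inputNames.Where(n => !initializerNames.Contains(n)).ToList();
var parameterInputs = inputNames.Where(n => initializerNames.Contains(n)).ToList();

Console.WriteLine($"Actual inputs: {string.Join(", ", actualInputs)}");
Console.WriteLine($"Parameters listed as inputs: {parameterInputs.Count}");
```

For mnist-8.onnx this leaves Input3 as the only actual input, which matches the "Inputs without Initializer" table in the info output.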

Having parameters listed as inputs can be a problem when you are using the model for inference only. It can prevent an inference library (like ONNX Runtime or TensorRT) from applying graph optimizations like constant folding, which Graph Optimization Levels describes as:

Constant Folding: Statically computes parts of the graph that rely only on constant initializers. This eliminates the need to compute them during runtime.
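As a rough illustration of the quoted definition, here is a toy constant-folding pass. Everything in it is hypothetical: the graph holds scalar values only, supports just Mul and Add, and the names w, b, x, wb and y are made up for the example. It is a sketch of the general technique, not of what ONNX Runtime or TensorRT actually do.

```csharp
using System;
using System.Collections.Generic;

// Known constants, playing the role of initializers (hypothetical values).
var constants = new Dictionary<string, double> { ["w"] = 2.0, ["b"] = 3.0 };

// Nodes in topological order: y = (w * b) + x, where x is a runtime input.
var nodes = new List<(string Op, string A, string B, string Output)>
{
    ("Mul", "w", "b", "wb"),
    ("Add", "wb", "x", "y"),
};

var remaining = new List<(string Op, string A, string B, string Output)>();
foreach (var node in nodes)
{
    // A node can be folded only if both of its inputs are already constant.
    if (constants.TryGetValue(node.A, out var a) && constants.TryGetValue(node.B, out var b))
    {
        // Compute the result once, ahead of time, and treat it as a new constant.
        constants[node.Output] = node.Op == "Mul" ? a * b : a + b;
    }
    else
    {
        // Depends on a runtime value, so it must stay in the graph.
        remaining.Add(node);
    }
}

Console.WriteLine($"Folded wb = {constants["wb"]}, nodes left: {remaining.Count}");
```

Here the Mul node disappears because both of its inputs are constants, while the Add node stays because it depends on x. If w were treated as a runtime input instead, the Mul node could not be folded either.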

Earlier versions of TensorRT would complain about parameters being listed as inputs, but version 7.2 does not, so be aware that this may not be an issue depending on which library you use for inference.

In any case, this is easy to fix with OnnxSharp/dotnet-onnx!

Simply run the clean command like:

```
dotnet onnx clean mnist-8.onnx mnist-8-clean.onnx
```

which will print:

```
Parsed input file 'mnist-8.onnx' of size 26394
Wrote output file 'mnist-8-clean.onnx' of size 25936
```

Printing the info for the new mnist-8-clean.onnx will then show the below. Notice how there are no longer any inputs with an initializer.

```
# mnist-8-clean.onnx

## Inputs without Initializer
### Tensors
|Name  |Type      |ElemType|Shape    |SizeInFile|
|:-----|:---------|:-------|--------:|---------:|
|Input3|TensorType|Float   |1x1x28x28|        32|

## Outputs
### Tensors
|Name            |Type      |ElemType|Shape|SizeInFile|
|:---------------|:---------|:-------|----:|---------:|
|Plus214_Output_0|TensorType|Float   | 1x10|        34|

## Inputs with Initializer

## Initializers (Parameters etc.)
|Name                              |DataType|Dims    |π(Dims)|[v0,v1..vN] | (Min,Mean,Max)        |SizeInFile|
|:---------------------------------|:-------|-------:|------:|-----------------------------------:|---------:|
|Parameter193                      |Float   |  256x10|   2560|(-7.595E-001,-1.779E-003,1.186E+000)|     10264|
|Parameter87                       |Float   |16x8x5x5|   3200|(-5.089E-001,-3.028E-002,5.647E-001)|     12824|
|Parameter5                        |Float   | 8x1x5x5|    200|(-9.727E-001,-7.360E-003,1.019E+000)|       823|
|Parameter6                        |Float   |   8x1x1|      8|(-4.338E-001,-1.023E-001,9.164E-002)|        53|
|Parameter88                       |Float   |  16x1x1|     16|(-4.147E-001,-1.554E-001,1.328E-002)|        86|
|Pooling160_Output_0_reshape0_shape|Int64   |       2|      2|                             [1,256]|        46|
|Parameter194                      |Float   |    1x10|     10|(-1.264E-001,-4.777E-006,1.402E-001)|        62|

## Value Infos
### Tensors
|Name                        |Type      |ElemType|Shape     |SizeInFile|
|:---------------------------|:---------|:-------|---------:|---------:|
|Parameter193_reshape1       |TensorType|Float   |    256x10|        40|
|Convolution28_Output_0      |TensorType|Float   | 1x8x28x28|        48|
|Plus30_Output_0             |TensorType|Float   | 1x8x28x28|        41|
|ReLU32_Output_0             |TensorType|Float   | 1x8x28x28|        41|
|Pooling66_Output_0          |TensorType|Float   | 1x8x14x14|        44|
|Convolution110_Output_0     |TensorType|Float   |1x16x14x14|        49|
|Plus112_Output_0            |TensorType|Float   |1x16x14x14|        42|
|ReLU114_Output_0            |TensorType|Float   |1x16x14x14|        42|
|Pooling160_Output_0         |TensorType|Float   |  1x16x4x4|        45|
|Pooling160_Output_0_reshape0|TensorType|Float   |     1x256|        47|
|Times212_Output_0           |TensorType|Float   |      1x10|        35|
```

Attentive readers will notice another thing. The initializer Parameter193_reshape1_shape has disappeared too, and the Dims (shape) of the initializer Parameter193 have changed from 16x4x4x10 to 256x10. This is because the clean command currently does two things, which can also be seen in the C# code for that command:

```csharp
public static partial class GraphExtensions
{
    /// <summary>Clean graph for inference.</summary>
    public static void Clean(this GraphProto graph)
    {
        graph.RemoveInitializersFromInputs();
        graph.RemoveUnnecessaryInitializerReshapes();
    }
}
```

As can be seen, this will both Remove Initializers From Inputs and Remove Unnecessary Initializer Reshapes, with the latter being the cause of the reshape disappearing. The reshape is not a problem as such, but removing it does clean up the graph if you look at it in Netron.
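Why can a reshape of an initializer be removed at all? The sketch below is my guess at the reasoning, not the actual code of RemoveUnnecessaryInitializerReshapes: when both the data and the target shape are constants, the reshape can be applied ahead of time by storing the new dims on the initializer, as long as the element count is unchanged. The dims are taken from the info output above; the variable names and the Product helper are made up for the example.

```csharp
using System;
using System.Linq;

// Values taken from the info output above.
long[] originalDims = { 16, 4, 4, 10 };   // Parameter193 before clean
long[] reshapeTarget = { 256, 10 };       // contents of Parameter193_reshape1_shape

// A reshape never changes the number of elements, only how they are indexed.
long Product(long[] dims) => dims.Aggregate(1L, (acc, d) => acc * d);

long originalCount = Product(originalDims);
long reshapedCount = Product(reshapeTarget);
if (originalCount != reshapedCount)
    throw new InvalidOperationException("Reshape target does not match element count.");

// Both the data and the target shape are constants, so the new dims can be
// stored on the initializer directly and the shape initializer dropped.
long[] bakedDims = reshapeTarget;
Console.WriteLine($"{string.Join("x", originalDims)} -> {string.Join("x", bakedDims)} ({reshapedCount} elements)");
```

Both shapes hold 2560 elements, which matches the π(Dims) column for Parameter193 before and after the clean command.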

That’s it for the announcement. I will cover the setdim command in another blog post and how it can be used to change models to have a dynamic batch size instead of, for example, the fixed 1 in the example mnist-8.onnx covered here.