Export and Quantize Models like Llama-3-8B-Instruct with Model Builder for ONNX Runtime GenAI

Previously, in "Phi-3-mini in 30 lines of C# with ONNX Runtime GenAI" and "Phi-3-vision in 50 lines of C# with ONNX Runtime GenAI", I showed how easy it is to run small Phi-3 models locally in just a few lines of C#. In this blog post I show how you can export another model, in this case Meta's Llama-3-8B-Instruct, with Model Builder for ONNX Runtime GenAI.

This basically follows the guide "Generate models using Model Builder", which details how to use the Python script builder.py to export models to ONNX format.

Ensure Python is installed:

```
python --version
Python 3.12.0
```

Create a directory for the work and a Python environment with the necessary packages installed (package versions are not pinned here 🤷):

```
mkdir onnxruntimegenai-model-build
cd onnxruntimegenai-model-build
python -m venv env
.\env\Scripts\activate.bat
pip install torch transformers onnx onnxruntime
pip install --pre onnxruntime-genai
```
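Because no versions are pinned, it can help to record what pip actually installed so the environment can be recreated later. A small sketch using the standard library's `importlib.metadata`; the package list simply mirrors the pip commands above, and `installed_versions` is a hypothetical helper name (`pip freeze` gives a similar, complete listing).

```python
# Record which versions pip actually installed, since none were pinned above.
from importlib.metadata import PackageNotFoundError, version

PACKAGES = ["torch", "transformers", "onnx", "onnxruntime", "onnxruntime-genai"]


def installed_versions(packages=PACKAGES):
    """Map each distribution name to its installed version, or None if missing."""
    versions = {}
    for name in packages:
        try:
            versions[name] = version(name)
        except PackageNotFoundError:
            versions[name] = None
    return versions


if __name__ == "__main__":
    for name, ver in installed_versions().items():
        # requirements.txt-style line when installed, a comment otherwise
        print(f"{name}=={ver}" if ver else f"# {name} not installed")
```

Redirecting the output to a file gives a starting point for a pinned requirements file.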

Try running the builder with --help to see its usage information:

```
python -m onnxruntime_genai.models.builder --help
usage: builder.py [-h] [-m MODEL_NAME] [-i INPUT] -o OUTPUT
                  -p {int4,fp16,fp32} -e {cpu,cuda,dml,web} [-c CACHE_DIR]
                  [--extra_options KEY=VALUE [KEY=VALUE ...]]
...
```

Alright, before continuing you need to be able to access and download models from Hugging Face 🤗. Here I use huggingface-cli to log in:

```
huggingface-cli login
```

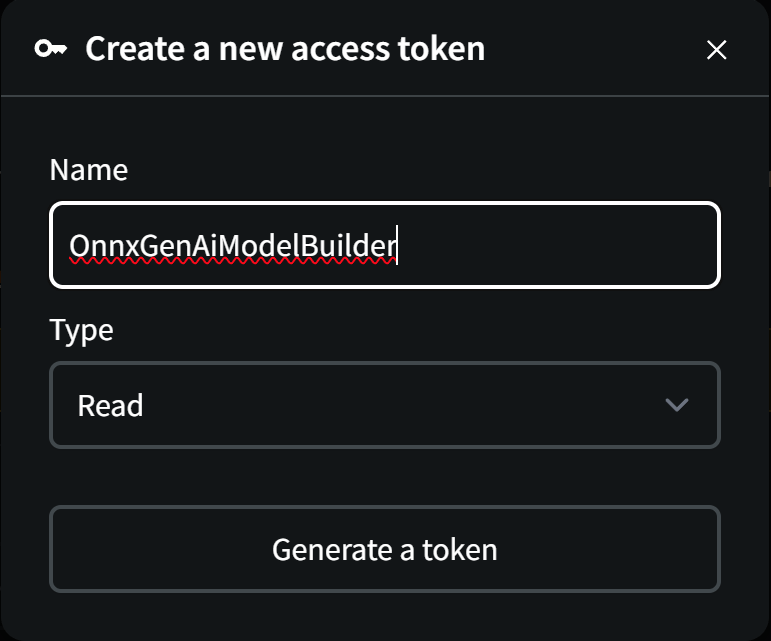

This will ask for a token:

```
To login, `huggingface_hub` requires a token generated
from https://huggingface.co/settings/tokens .
```

The token can be created at the link given above (see the screenshot).

Paste the token and, optionally, add it as a git credential:

```
Token can be pasted using 'Right-Click'.
Enter your token (input will not be visible):
Add token as git credential? (Y/n) y
Token is valid (permission: read).
Your token has been saved in your configured
git credential helpers (manager).
Your token has been saved to
C:\Users\USERNAME\.cache\huggingface\token
Login successful
```
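Before starting a long export from a script, you may want to check that a token is available at all. A minimal sketch, assuming the default token location shown in the output above (`~/.cache/huggingface/token`, i.e. `$HF_HOME/token`) and the `HF_TOKEN` environment variable; `huggingface_hub` may consult other settings as well, so treat this as a rough check. The helper names are hypothetical.

```python
# Rough check for a Hugging Face token, without printing the token itself.
import os
from pathlib import Path


def hf_token_path() -> Path:
    """Assumed default: $HF_HOME/token, else ~/.cache/huggingface/token."""
    hf_home = os.environ.get("HF_HOME")
    base = Path(hf_home) if hf_home else Path.home() / ".cache" / "huggingface"
    return base / "token"


def has_hf_token() -> bool:
    """True if HF_TOKEN is set or a non-empty token file exists at the assumed path."""
    if os.environ.get("HF_TOKEN"):
        return True
    path = hf_token_path()
    return path.is_file() and path.read_text(encoding="utf-8").strip() != ""


if __name__ == "__main__":
    print("token found" if has_hf_token() else f"no token at {hf_token_path()}")
```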

Now you can run the builder. Initially I wanted to try out the new Qwen2 SLMs. However, the model builder only works for a predefined list of model architectures and names, as detailed at builder.py#L2239-L2264. Instead, I thought I'd try the Llama-3-8B-Instruct model from Meta. You have to request access to it, so I did that.
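Since the builder only handles certain architectures, one way to check a candidate model up front is to read the `architectures` field of its Hugging Face `config.json` (for Llama-3-8B-Instruct this is `LlamaForCausalLM`, for Qwen2 models `Qwen2ForCausalLM`). A sketch; the supported set below is a placeholder, not the builder's actual list, which lives in builder.py, and the helper names are hypothetical.

```python
import json
from pathlib import Path

# Placeholder: fill in from the supported-model list in builder.py for your version.
SUPPORTED_ARCHITECTURES = {"LlamaForCausalLM"}


def model_architectures(config_path):
    """Return the "architectures" list from a Hugging Face config.json."""
    config = json.loads(Path(config_path).read_text(encoding="utf-8"))
    return list(config.get("architectures", []))


def is_supported(config_path, supported=SUPPORTED_ARCHITECTURES):
    """True if any architecture named in config.json is in the supported set."""
    return any(arch in supported for arch in model_architectures(config_path))
```

A model's `config.json` can be fetched with `huggingface_hub.hf_hub_download(repo_id=..., filename="config.json")`; for gated models like Llama 3 this requires the access and login described above.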

To export and quantize the model, I used the following command line:

```
python -m onnxruntime_genai.models.builder -m "meta-llama/Meta-Llama-3-8B-Instruct" -o Meta-Llama-3-8B-Instruct-onnx-cuda-int4 -p int4 -e cuda -c cache
```

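For readability, here is the same command split across lines (to paste it in this form, end each line with your shell's continuation character, such as ^ in cmd.exe):

```
python -m onnxruntime_genai.models.builder
    -m "meta-llama/Meta-Llama-3-8B-Instruct"
    -o Meta-Llama-3-8B-Instruct-onnx-cuda-int4
    -p int4 -e cuda -c cache
```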

This provides the model name (-m), output directory (-o), precision (-p), execution provider (-e) and a cache directory (-c) for the downloaded Hugging Face files.
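To script the export, the same invocation can be assembled as an argument list and run with `subprocess`, which also avoids shell quoting and line-continuation differences. A sketch; `builder_command` is a hypothetical helper, and the allowed precision/execution provider pairs are copied from the builder's log output at the bottom of this post (other versions may differ).

```python
import subprocess
import sys

# Pairs reported as valid by the builder version used in this post (see its log).
VALID_COMBINATIONS = {
    ("fp32", "cpu"), ("fp32", "cuda"), ("fp16", "cuda"), ("fp16", "dml"),
    ("int4", "cpu"), ("int4", "cuda"), ("int4", "dml"),
}


def builder_command(model_name, output_dir, precision="int4",
                    execution_provider="cuda", cache_dir="cache"):
    """Build the argument list for onnxruntime_genai.models.builder."""
    if (precision, execution_provider) not in VALID_COMBINATIONS:
        raise ValueError(f"unsupported combination: {precision} + {execution_provider}")
    return [
        sys.executable, "-m", "onnxruntime_genai.models.builder",
        "-m", model_name,          # Hugging Face model name
        "-o", output_dir,          # output directory for the ONNX model
        "-p", precision,           # precision
        "-e", execution_provider,  # execution provider
        "-c", cache_dir,           # cache directory
    ]


if __name__ == "__main__":
    cmd = builder_command("meta-llama/Meta-Llama-3-8B-Instruct",
                          "Meta-Llama-3-8B-Instruct-onnx-cuda-int4")
    subprocess.run(cmd, check=True)  # raises CalledProcessError on failure
```

Using `sys.executable` runs the builder with the same interpreter, and therefore the same installed packages, as the calling script.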

This takes a while to run; for the full log, see the bottom of this post. The result is a directory Meta-Llama-3-8B-Instruct-onnx-cuda-int4 containing the ONNX model and quantized weights:

```
    Length Name
    ------ ----
      1747 genai_config.json
    208381 model.onnx
5274673152 model.onnx.data
       312 special_tokens_map.json
   9085698 tokenizer.json
     53039 tokenizer_config.json
```

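Hence, the 8B-parameter model (~16 GB of weights in fp16) has been quantized to ~5.3 GB, somewhat larger than the ~4 GB a theoretical 4x reduction from fp16 to int4 would give. The difference is most likely overhead such as per-block quantization scales and weights that are not stored as int4, for example the embedding table.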
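The size gap can be sanity-checked with some arithmetic. A minimal sketch, assuming the published Llama-3-8B configuration (vocabulary 128,256, hidden size 4,096, 32 layers, MLP size 14,336, 8 key/value heads of size 128). It further assumes the int4 scheme uses blocks of 32 weights with one fp16 scale each and no zero points, quantizes every MatMul including the LM head, and leaves the embedding table and norms in fp16; those quantization details are assumptions, not something verified here.

```python
# Back-of-the-envelope size estimate for Llama-3-8B, fp16 vs. int4 (assumptions above).
VOCAB, HIDDEN, LAYERS, INTERMEDIATE = 128_256, 4_096, 32, 14_336
KV_DIM = 8 * 128  # 8 key/value heads x head size 128 (grouped-query attention)
BLOCK_SIZE = 32   # assumed int4 block size, one fp16 scale per block


def parameter_counts():
    attention = 2 * HIDDEN * HIDDEN + 2 * HIDDEN * KV_DIM  # q, o and k, v projections
    mlp = 3 * HIDDEN * INTERMEDIATE                       # gate, up, down projections
    matmul = LAYERS * (attention + mlp) + VOCAB * HIDDEN   # decoder MatMuls + LM head
    embedding = VOCAB * HIDDEN                             # assumed to stay fp16
    norms = (2 * LAYERS + 1) * HIDDEN                      # two per layer + final norm
    return matmul, embedding, norms


def estimated_bytes():
    matmul, embedding, norms = parameter_counts()
    fp16 = 2 * (matmul + embedding + norms)
    # 4 bits per quantized weight + 2-byte scale per block + fp16 for the rest
    int4 = matmul // 2 + 2 * (matmul // BLOCK_SIZE) + 2 * (embedding + norms)
    return fp16, int4


if __name__ == "__main__":
    fp16, int4 = estimated_bytes()
    print(f"fp16: {fp16 / 1e9:.2f} GB, int4 estimate: {int4 / 1e9:.2f} GB")
```

Under these assumptions the estimate is about 5.27 GB (versus 16.06 GB in fp16), close to the 5,274,673,152-byte model.onnx.data above. That suggests the scales (~0.47 GB) and the fp16 embedding table (~1.05 GB) account for most of the gap, but treat it as a plausibility check rather than a breakdown of the actual file.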

Based on the code in my previous blog post Phi-3-mini in 30 lines of C# with ONNX Runtime GenAI you can now run the model with ONNX Runtime GenAI by simply pointing at that directory. For example:

```
dotnet run -- Meta-Llama-3-8B-Instruct-onnx-cuda-int4
Prompt: Who are you?
Processing...
Generating response...
================ Output ================
I'm LLaMA, an AI assistant developed by Meta AI that can understand and
respond to human input in a conversational manner. I'm here to help answer
your questions, provide information, and even have a chat with you.
I'm not a human, but I'm designed to be friendly and helpful, so feel free
to ask me anything!
==========================================
```
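Chat templates differ between model families, so when reusing prompt code written for Phi-3 it may be worth checking how the prompt is formatted. A minimal sketch of the single-turn Llama 3 Instruct format, based on Meta's published chat template; `llama3_prompt` is a hypothetical helper, and depending on whether the tokenizer already prepends `<|begin_of_text|>`, you may need to drop that token.

```python
from typing import Optional


def llama3_prompt(user_message: str, system_message: Optional[str] = None) -> str:
    """Format a single-turn prompt in the Llama 3 Instruct chat format."""
    parts = ["<|begin_of_text|>"]
    if system_message:
        parts.append(f"<|start_header_id|>system<|end_header_id|>\n\n{system_message}<|eot_id|>")
    parts.append(f"<|start_header_id|>user<|end_header_id|>\n\n{user_message}<|eot_id|>")
    # End with the assistant header so the model continues as the assistant.
    parts.append("<|start_header_id|>assistant<|end_header_id|>\n\n")
    return "".join(parts)
```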

That’s all!

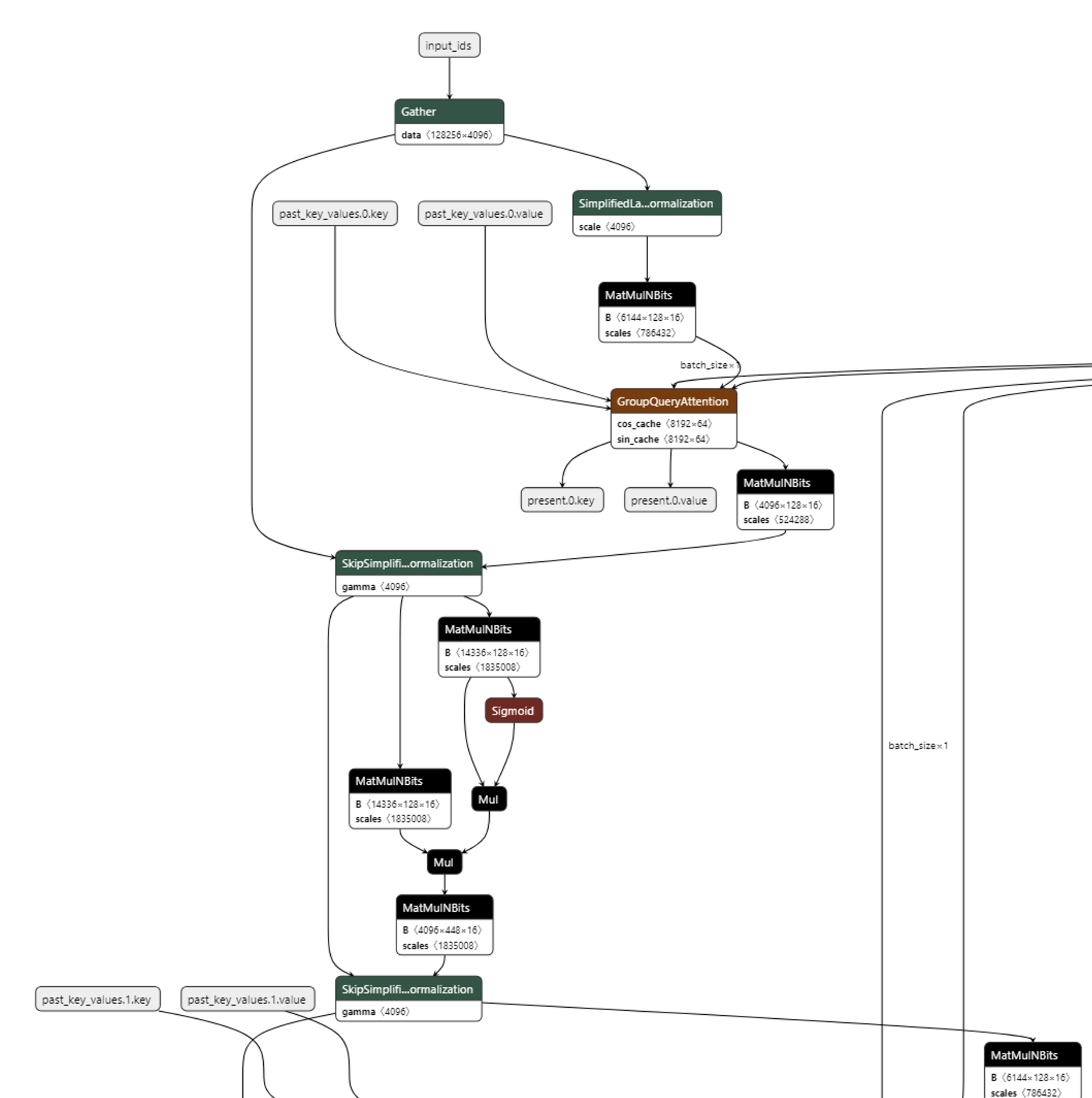

PS: The image above gives a quick view of the network architecture at the start of the model. The remainder is pretty much the same block repeated.

PPS: Full log of model builder run:


python -m onnxruntime_genai.models.builder -m "meta-llama/Meta-Llama-3-8B-Instruct" -o Meta-Llama-3-8B-Instruct-onnx-cuda-int4 -p int4 -e cuda -c cache

Valid precision + execution provider combinations are: FP32 CPU, FP32 CUDA, FP16 CUDA, FP16 DML, INT4 CPU, INT4 CUDA, INT4 DML

Extra options: {}

C:\Python312\Lib\site-packages\transformers\models\auto\configuration_auto.py:919: FutureWarning: The `use_auth_token` argument is deprecated and will be removed in v5 of Transformers. Please use `token` instead.

warnings.warn(

C:\Python312\Lib\site-packages\huggingface_hub\file_download.py:1132: FutureWarning: `resume_download` is deprecated and will be removed in version 1.0.0. Downloads always resume when possible. If you want to force a new download, use `force_download=True`.

warnings.warn(

config.json: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 654/654 [00:00<?, ?B/s]

GroupQueryAttention (GQA) is used in this model.

C:\Python312\Lib\site-packages\transformers\models\auto\auto_factory.py:468: FutureWarning: The `use_auth_token` argument is deprecated and will be removed in v5 of Transformers. Please use `token` instead.

warnings.warn(

model.safetensors.index.json: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 23.9k/23.9k [00:00<?, ?B/s]

model-00001-of-00004.safetensors: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 4.98G/4.98G [01:03<00:00, 79.0MB/s]

model-00002-of-00004.safetensors: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 5.00G/5.00G [00:59<00:00, 83.6MB/s]

model-00003-of-00004.safetensors: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 4.92G/4.92G [00:58<00:00, 83.6MB/s]

model-00004-of-00004.safetensors: 100%|████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 1.17G/1.17G [00:14<00:00, 78.5MB/s]

Downloading shards: 100%|█████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 4/4 [03:17<00:00, 49.45s/it]

Loading checkpoint shards: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 4/4 [00:21<00:00, 5.47s/it]

generation_config.json: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 187/187 [00:00<?, ?B/s]

Reading embedding layer

Reading decoder layer 0

Reading decoder layer 1

Reading decoder layer 2

Reading decoder layer 3

Reading decoder layer 4

Reading decoder layer 5

Reading decoder layer 6

Reading decoder layer 7

Reading decoder layer 8

Reading decoder layer 9

Reading decoder layer 10

Reading decoder layer 11

Reading decoder layer 12

Reading decoder layer 13

Reading decoder layer 14

Reading decoder layer 15

Reading decoder layer 16

Reading decoder layer 17

Reading decoder layer 18

Reading decoder layer 19

Reading decoder layer 20

Reading decoder layer 21

Reading decoder layer 22

Reading decoder layer 23

Reading decoder layer 24

Reading decoder layer 25

Reading decoder layer 26

Reading decoder layer 27

Reading decoder layer 28

Reading decoder layer 29

Reading decoder layer 30

Reading decoder layer 31

Reading final norm

Reading LM head

Saving ONNX model in Meta-Llama-3-8B-Instruct-onnx-cuda-int4

2024-06-08 14:05:16,409 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.0/attn/qkv_proj/MatMul ...

2024-06-08 14:05:16,578 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.0/attn/qkv_proj/MatMul ...

2024-06-08 14:05:16,585 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.0/attn/o_proj/MatMul ...

2024-06-08 14:05:16,661 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.0/attn/o_proj/MatMul ...

2024-06-08 14:05:16,664 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.0/mlp/gate_proj/MatMul ...

2024-06-08 14:05:16,861 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.0/mlp/gate_proj/MatMul ...

2024-06-08 14:05:16,871 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.0/mlp/up_proj/MatMul ...

2024-06-08 14:05:17,059 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.0/mlp/up_proj/MatMul ...

2024-06-08 14:05:17,069 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.0/mlp/down_proj/MatMul ...

2024-06-08 14:05:17,264 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.0/mlp/down_proj/MatMul ...

2024-06-08 14:05:17,275 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.1/attn/qkv_proj/MatMul ...

2024-06-08 14:05:17,380 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.1/attn/qkv_proj/MatMul ...

2024-06-08 14:05:17,385 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.1/attn/o_proj/MatMul ...

2024-06-08 14:05:17,447 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.1/attn/o_proj/MatMul ...

2024-06-08 14:05:17,451 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.1/mlp/gate_proj/MatMul ...

2024-06-08 14:05:17,656 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.1/mlp/gate_proj/MatMul ...

2024-06-08 14:05:17,669 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.1/mlp/up_proj/MatMul ...

2024-06-08 14:05:17,861 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.1/mlp/up_proj/MatMul ...

2024-06-08 14:05:17,872 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.1/mlp/down_proj/MatMul ...

2024-06-08 14:05:18,053 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.1/mlp/down_proj/MatMul ...

2024-06-08 14:05:18,063 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.2/attn/qkv_proj/MatMul ...

2024-06-08 14:05:18,146 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.2/attn/qkv_proj/MatMul ...

2024-06-08 14:05:18,152 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.2/attn/o_proj/MatMul ...

2024-06-08 14:05:18,207 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.2/attn/o_proj/MatMul ...

2024-06-08 14:05:18,211 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.2/mlp/gate_proj/MatMul ...

2024-06-08 14:05:18,396 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.2/mlp/gate_proj/MatMul ...

2024-06-08 14:05:18,406 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.2/mlp/up_proj/MatMul ...

2024-06-08 14:05:18,597 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.2/mlp/up_proj/MatMul ...

2024-06-08 14:05:18,610 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.2/mlp/down_proj/MatMul ...

2024-06-08 14:05:18,784 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.2/mlp/down_proj/MatMul ...

2024-06-08 14:05:18,793 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.3/attn/qkv_proj/MatMul ...

2024-06-08 14:05:18,870 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.3/attn/qkv_proj/MatMul ...

2024-06-08 14:05:18,874 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.3/attn/o_proj/MatMul ...

2024-06-08 14:05:18,928 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.3/attn/o_proj/MatMul ...

2024-06-08 14:05:18,931 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.3/mlp/gate_proj/MatMul ...

2024-06-08 14:05:19,103 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.3/mlp/gate_proj/MatMul ...

2024-06-08 14:05:19,113 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.3/mlp/up_proj/MatMul ...

2024-06-08 14:05:19,296 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.3/mlp/up_proj/MatMul ...

2024-06-08 14:05:19,306 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.3/mlp/down_proj/MatMul ...

2024-06-08 14:05:19,501 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.3/mlp/down_proj/MatMul ...

2024-06-08 14:05:19,510 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.4/attn/qkv_proj/MatMul ...

2024-06-08 14:05:19,594 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.4/attn/qkv_proj/MatMul ...

2024-06-08 14:05:19,598 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.4/attn/o_proj/MatMul ...

2024-06-08 14:05:19,654 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.4/attn/o_proj/MatMul ...

2024-06-08 14:05:19,657 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.4/mlp/gate_proj/MatMul ...

2024-06-08 14:05:19,835 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.4/mlp/gate_proj/MatMul ...

2024-06-08 14:05:19,844 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.4/mlp/up_proj/MatMul ...

2024-06-08 14:05:20,020 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.4/mlp/up_proj/MatMul ...

2024-06-08 14:05:20,030 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.4/mlp/down_proj/MatMul ...

2024-06-08 14:05:20,216 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.4/mlp/down_proj/MatMul ...

2024-06-08 14:05:20,225 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.5/attn/qkv_proj/MatMul ...

2024-06-08 14:05:20,309 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.5/attn/qkv_proj/MatMul ...

2024-06-08 14:05:20,314 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.5/attn/o_proj/MatMul ...

2024-06-08 14:05:20,374 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.5/attn/o_proj/MatMul ...

2024-06-08 14:05:20,377 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.5/mlp/gate_proj/MatMul ...

2024-06-08 14:05:20,558 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.5/mlp/gate_proj/MatMul ...

2024-06-08 14:05:20,570 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.5/mlp/up_proj/MatMul ...

2024-06-08 14:05:20,741 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.5/mlp/up_proj/MatMul ...

2024-06-08 14:05:20,751 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.5/mlp/down_proj/MatMul ...

2024-06-08 14:05:20,928 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.5/mlp/down_proj/MatMul ...

2024-06-08 14:05:20,938 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.6/attn/qkv_proj/MatMul ...

2024-06-08 14:05:21,021 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.6/attn/qkv_proj/MatMul ...

2024-06-08 14:05:21,025 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.6/attn/o_proj/MatMul ...

2024-06-08 14:05:21,090 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.6/attn/o_proj/MatMul ...

2024-06-08 14:05:21,093 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.6/mlp/gate_proj/MatMul ...

2024-06-08 14:05:21,288 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.6/mlp/gate_proj/MatMul ...

2024-06-08 14:05:21,298 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.6/mlp/up_proj/MatMul ...

2024-06-08 14:05:21,489 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.6/mlp/up_proj/MatMul ...

2024-06-08 14:05:21,499 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.6/mlp/down_proj/MatMul ...

2024-06-08 14:05:21,688 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.6/mlp/down_proj/MatMul ...

2024-06-08 14:05:21,698 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.7/attn/qkv_proj/MatMul ...

2024-06-08 14:05:21,782 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.7/attn/qkv_proj/MatMul ...

2024-06-08 14:05:21,786 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.7/attn/o_proj/MatMul ...

2024-06-08 14:05:21,852 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.7/attn/o_proj/MatMul ...

2024-06-08 14:05:21,855 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.7/mlp/gate_proj/MatMul ...

2024-06-08 14:05:22,038 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.7/mlp/gate_proj/MatMul ...

2024-06-08 14:05:22,048 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.7/mlp/up_proj/MatMul ...

2024-06-08 14:05:22,239 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.7/mlp/up_proj/MatMul ...

2024-06-08 14:05:22,249 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.7/mlp/down_proj/MatMul ...

2024-06-08 14:05:22,445 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.7/mlp/down_proj/MatMul ...

2024-06-08 14:05:22,455 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.8/attn/qkv_proj/MatMul ...

2024-06-08 14:05:22,538 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.8/attn/qkv_proj/MatMul ...

2024-06-08 14:05:22,543 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.8/attn/o_proj/MatMul ...

2024-06-08 14:05:22,598 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.8/attn/o_proj/MatMul ...

2024-06-08 14:05:22,601 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.8/mlp/gate_proj/MatMul ...

2024-06-08 14:05:22,784 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.8/mlp/gate_proj/MatMul ...

2024-06-08 14:05:22,793 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.8/mlp/up_proj/MatMul ...

2024-06-08 14:05:22,989 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.8/mlp/up_proj/MatMul ...

2024-06-08 14:05:22,998 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.8/mlp/down_proj/MatMul ...

2024-06-08 14:05:23,186 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.8/mlp/down_proj/MatMul ...

2024-06-08 14:05:23,196 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.9/attn/qkv_proj/MatMul ...

2024-06-08 14:05:23,279 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.9/attn/qkv_proj/MatMul ...

2024-06-08 14:05:23,284 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.9/attn/o_proj/MatMul ...

2024-06-08 14:05:23,344 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.9/attn/o_proj/MatMul ...

2024-06-08 14:05:23,347 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.9/mlp/gate_proj/MatMul ...

2024-06-08 14:05:23,549 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.9/mlp/gate_proj/MatMul ...

2024-06-08 14:05:23,560 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.9/mlp/up_proj/MatMul ...

2024-06-08 14:05:23,746 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.9/mlp/up_proj/MatMul ...

2024-06-08 14:05:23,756 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.9/mlp/down_proj/MatMul ...

2024-06-08 14:05:23,950 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.9/mlp/down_proj/MatMul ...

2024-06-08 14:05:23,959 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.10/attn/qkv_proj/MatMul ...

2024-06-08 14:05:24,040 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.10/attn/qkv_proj/MatMul ...

2024-06-08 14:05:24,044 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.10/attn/o_proj/MatMul ...

2024-06-08 14:05:24,105 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.10/attn/o_proj/MatMul ...

2024-06-08 14:05:24,108 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.10/mlp/gate_proj/MatMul ...

2024-06-08 14:05:24,312 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.10/mlp/gate_proj/MatMul ...

2024-06-08 14:05:24,322 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.10/mlp/up_proj/MatMul ...

2024-06-08 14:05:24,510 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.10/mlp/up_proj/MatMul ...

2024-06-08 14:05:24,521 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.10/mlp/down_proj/MatMul ...

2024-06-08 14:05:24,706 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.10/mlp/down_proj/MatMul ...

2024-06-08 14:05:24,716 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.11/attn/qkv_proj/MatMul ...

2024-06-08 14:05:24,802 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.11/attn/qkv_proj/MatMul ...

2024-06-08 14:05:24,806 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.11/attn/o_proj/MatMul ...

2024-06-08 14:05:24,870 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.11/attn/o_proj/MatMul ...

2024-06-08 14:05:24,873 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.11/mlp/gate_proj/MatMul ...

2024-06-08 14:05:25,067 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.11/mlp/gate_proj/MatMul ...

2024-06-08 14:05:25,076 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.11/mlp/up_proj/MatMul ...

2024-06-08 14:05:25,270 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.11/mlp/up_proj/MatMul ...

2024-06-08 14:05:25,279 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.11/mlp/down_proj/MatMul ...

2024-06-08 14:05:25,464 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.11/mlp/down_proj/MatMul ...

2024-06-08 14:05:25,474 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.12/attn/qkv_proj/MatMul ...

2024-06-08 14:05:25,558 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.12/attn/qkv_proj/MatMul ...

2024-06-08 14:05:25,562 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.12/attn/o_proj/MatMul ...

2024-06-08 14:05:25,621 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.12/attn/o_proj/MatMul ...

2024-06-08 14:05:25,624 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.12/mlp/gate_proj/MatMul ...

2024-06-08 14:05:25,806 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.12/mlp/gate_proj/MatMul ...

2024-06-08 14:05:25,815 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.12/mlp/up_proj/MatMul ...

2024-06-08 14:05:26,008 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.12/mlp/up_proj/MatMul ...

2024-06-08 14:05:26,019 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.12/mlp/down_proj/MatMul ...

2024-06-08 14:05:26,202 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.12/mlp/down_proj/MatMul ...

2024-06-08 14:05:26,212 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.13/attn/qkv_proj/MatMul ...

2024-06-08 14:05:26,292 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.13/attn/qkv_proj/MatMul ...

2024-06-08 14:05:26,296 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.13/attn/o_proj/MatMul ...

2024-06-08 14:05:26,356 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.13/attn/o_proj/MatMul ...

2024-06-08 14:05:26,359 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.13/mlp/gate_proj/MatMul ...

2024-06-08 14:05:26,543 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.13/mlp/gate_proj/MatMul ...

2024-06-08 14:05:26,553 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.13/mlp/up_proj/MatMul ...

2024-06-08 14:05:26,736 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.13/mlp/up_proj/MatMul ...

2024-06-08 14:05:26,746 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.13/mlp/down_proj/MatMul ...

2024-06-08 14:05:26,945 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.13/mlp/down_proj/MatMul ...

2024-06-08 14:05:26,955 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.14/attn/qkv_proj/MatMul ...

2024-06-08 14:05:27,042 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.14/attn/qkv_proj/MatMul ...

2024-06-08 14:05:27,046 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.14/attn/o_proj/MatMul ...

2024-06-08 14:05:27,106 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.14/attn/o_proj/MatMul ...

2024-06-08 14:05:27,109 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.14/mlp/gate_proj/MatMul ...

2024-06-08 14:05:27,319 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.14/mlp/gate_proj/MatMul ...

2024-06-08 14:05:27,328 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.14/mlp/up_proj/MatMul ...

2024-06-08 14:05:27,561 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.14/mlp/up_proj/MatMul ...

2024-06-08 14:05:27,568 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.14/mlp/down_proj/MatMul ...

2024-06-08 14:05:27,756 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.14/mlp/down_proj/MatMul ...

2024-06-08 14:05:27,766 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.15/attn/qkv_proj/MatMul ...

2024-06-08 14:05:27,848 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.15/attn/qkv_proj/MatMul ...

2024-06-08 14:05:27,852 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.15/attn/o_proj/MatMul ...

2024-06-08 14:05:27,911 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.15/attn/o_proj/MatMul ...

2024-06-08 14:05:27,914 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.15/mlp/gate_proj/MatMul ...

2024-06-08 14:05:28,124 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.15/mlp/gate_proj/MatMul ...

2024-06-08 14:05:28,137 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.15/mlp/up_proj/MatMul ...

2024-06-08 14:05:28,343 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.15/mlp/up_proj/MatMul ...

2024-06-08 14:05:28,353 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.15/mlp/down_proj/MatMul ...

2024-06-08 14:05:28,565 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.15/mlp/down_proj/MatMul ...

2024-06-08 14:05:28,574 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.16/attn/qkv_proj/MatMul ...

2024-06-08 14:05:28,657 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.16/attn/qkv_proj/MatMul ...

2024-06-08 14:05:28,669 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.16/attn/o_proj/MatMul ...

2024-06-08 14:05:28,729 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.16/attn/o_proj/MatMul ...

2024-06-08 14:05:28,732 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.16/mlp/gate_proj/MatMul ...

2024-06-08 14:05:28,914 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.16/mlp/gate_proj/MatMul ...

2024-06-08 14:05:28,924 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.16/mlp/up_proj/MatMul ...

2024-06-08 14:05:29,118 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.16/mlp/up_proj/MatMul ...

2024-06-08 14:05:29,127 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.16/mlp/down_proj/MatMul ...

2024-06-08 14:05:29,320 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.16/mlp/down_proj/MatMul ...

2024-06-08 14:05:29,329 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.17/attn/qkv_proj/MatMul ...

2024-06-08 14:05:29,414 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.17/attn/qkv_proj/MatMul ...

2024-06-08 14:05:29,418 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.17/attn/o_proj/MatMul ...

2024-06-08 14:05:29,478 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.17/attn/o_proj/MatMul ...

2024-06-08 14:05:29,482 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.17/mlp/gate_proj/MatMul ...

2024-06-08 14:05:29,674 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.17/mlp/gate_proj/MatMul ...

2024-06-08 14:05:29,684 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.17/mlp/up_proj/MatMul ...

2024-06-08 14:05:29,893 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.17/mlp/up_proj/MatMul ...

2024-06-08 14:05:29,902 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.17/mlp/down_proj/MatMul ...

2024-06-08 14:05:30,106 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.17/mlp/down_proj/MatMul ...

2024-06-08 14:05:30,116 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.18/attn/qkv_proj/MatMul ...

2024-06-08 14:05:30,199 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.18/attn/qkv_proj/MatMul ...

2024-06-08 14:05:30,203 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.18/attn/o_proj/MatMul ...

2024-06-08 14:05:30,262 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.18/attn/o_proj/MatMul ...

2024-06-08 14:05:30,265 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.18/mlp/gate_proj/MatMul ...

2024-06-08 14:05:30,530 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.18/mlp/gate_proj/MatMul ...

2024-06-08 14:05:30,539 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.18/mlp/up_proj/MatMul ...

2024-06-08 14:05:30,729 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.18/mlp/up_proj/MatMul ...

2024-06-08 14:05:30,740 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.18/mlp/down_proj/MatMul ...

2024-06-08 14:05:30,930 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.18/mlp/down_proj/MatMul ...

2024-06-08 14:05:30,940 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.19/attn/qkv_proj/MatMul ...

2024-06-08 14:05:31,021 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.19/attn/qkv_proj/MatMul ...

2024-06-08 14:05:31,026 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.19/attn/o_proj/MatMul ...

2024-06-08 14:05:31,095 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.19/attn/o_proj/MatMul ...

2024-06-08 14:05:31,098 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.19/mlp/gate_proj/MatMul ...

2024-06-08 14:05:31,299 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.19/mlp/gate_proj/MatMul ...

2024-06-08 14:05:31,309 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.19/mlp/up_proj/MatMul ...

2024-06-08 14:05:31,503 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.19/mlp/up_proj/MatMul ...

2024-06-08 14:05:31,513 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.19/mlp/down_proj/MatMul ...

2024-06-08 14:05:31,703 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.19/mlp/down_proj/MatMul ...

2024-06-08 14:05:31,712 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.20/attn/qkv_proj/MatMul ...

2024-06-08 14:05:31,791 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.20/attn/qkv_proj/MatMul ...

2024-06-08 14:05:31,795 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.20/attn/o_proj/MatMul ...

2024-06-08 14:05:31,854 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.20/attn/o_proj/MatMul ...

2024-06-08 14:05:31,858 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.20/mlp/gate_proj/MatMul ...

2024-06-08 14:05:32,048 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.20/mlp/gate_proj/MatMul ...

2024-06-08 14:05:32,058 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.20/mlp/up_proj/MatMul ...

2024-06-08 14:05:32,320 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.20/mlp/up_proj/MatMul ...

2024-06-08 14:05:32,330 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.20/mlp/down_proj/MatMul ...

2024-06-08 14:05:32,535 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.20/mlp/down_proj/MatMul ...

2024-06-08 14:05:32,548 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.21/attn/qkv_proj/MatMul ...

2024-06-08 14:05:32,626 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.21/attn/qkv_proj/MatMul ...

2024-06-08 14:05:32,631 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.21/attn/o_proj/MatMul ...

2024-06-08 14:05:32,705 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.21/attn/o_proj/MatMul ...

2024-06-08 14:05:32,708 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.21/mlp/gate_proj/MatMul ...

2024-06-08 14:05:32,911 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.21/mlp/gate_proj/MatMul ...

2024-06-08 14:05:32,920 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.21/mlp/up_proj/MatMul ...

2024-06-08 14:05:33,118 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.21/mlp/up_proj/MatMul ...

2024-06-08 14:05:33,128 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.21/mlp/down_proj/MatMul ...

2024-06-08 14:05:33,316 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.21/mlp/down_proj/MatMul ...

2024-06-08 14:05:33,327 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.22/attn/qkv_proj/MatMul ...

2024-06-08 14:05:33,405 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.22/attn/qkv_proj/MatMul ...

2024-06-08 14:05:33,409 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.22/attn/o_proj/MatMul ...

2024-06-08 14:05:33,469 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.22/attn/o_proj/MatMul ...

2024-06-08 14:05:33,472 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.22/mlp/gate_proj/MatMul ...

2024-06-08 14:05:33,658 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.22/mlp/gate_proj/MatMul ...

2024-06-08 14:05:33,668 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.22/mlp/up_proj/MatMul ...

2024-06-08 14:05:33,853 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.22/mlp/up_proj/MatMul ...

2024-06-08 14:05:33,862 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.22/mlp/down_proj/MatMul ...

2024-06-08 14:05:34,046 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.22/mlp/down_proj/MatMul ...

2024-06-08 14:05:34,056 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.23/attn/qkv_proj/MatMul ...

2024-06-08 14:05:34,139 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.23/attn/qkv_proj/MatMul ...

2024-06-08 14:05:34,143 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.23/attn/o_proj/MatMul ...

2024-06-08 14:05:34,207 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.23/attn/o_proj/MatMul ...

2024-06-08 14:05:34,211 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.23/mlp/gate_proj/MatMul ...

2024-06-08 14:05:34,410 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.23/mlp/gate_proj/MatMul ...

2024-06-08 14:05:34,419 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.23/mlp/up_proj/MatMul ...

2024-06-08 14:05:34,618 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.23/mlp/up_proj/MatMul ...

2024-06-08 14:05:34,629 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.23/mlp/down_proj/MatMul ...

2024-06-08 14:05:34,825 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.23/mlp/down_proj/MatMul ...

2024-06-08 14:05:34,834 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.24/attn/qkv_proj/MatMul ...

2024-06-08 14:05:34,921 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.24/attn/qkv_proj/MatMul ...

2024-06-08 14:05:34,926 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.24/attn/o_proj/MatMul ...

2024-06-08 14:05:34,989 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.24/attn/o_proj/MatMul ...

2024-06-08 14:05:34,992 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.24/mlp/gate_proj/MatMul ...

2024-06-08 14:05:35,205 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.24/mlp/gate_proj/MatMul ...

2024-06-08 14:05:35,214 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.24/mlp/up_proj/MatMul ...

2024-06-08 14:05:35,422 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.24/mlp/up_proj/MatMul ...

2024-06-08 14:05:35,432 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.24/mlp/down_proj/MatMul ...

2024-06-08 14:05:35,640 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.24/mlp/down_proj/MatMul ...

2024-06-08 14:05:35,651 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.25/attn/qkv_proj/MatMul ...

2024-06-08 14:05:35,762 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.25/attn/qkv_proj/MatMul ...

2024-06-08 14:05:35,767 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.25/attn/o_proj/MatMul ...

2024-06-08 14:05:35,827 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.25/attn/o_proj/MatMul ...

2024-06-08 14:05:35,831 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.25/mlp/gate_proj/MatMul ...

2024-06-08 14:05:36,039 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.25/mlp/gate_proj/MatMul ...

2024-06-08 14:05:36,048 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.25/mlp/up_proj/MatMul ...

2024-06-08 14:05:36,238 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.25/mlp/up_proj/MatMul ...

2024-06-08 14:05:36,247 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.25/mlp/down_proj/MatMul ...

2024-06-08 14:05:36,435 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.25/mlp/down_proj/MatMul ...

2024-06-08 14:05:36,444 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.26/attn/qkv_proj/MatMul ...

2024-06-08 14:05:36,529 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.26/attn/qkv_proj/MatMul ...

2024-06-08 14:05:36,533 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.26/attn/o_proj/MatMul ...

2024-06-08 14:05:36,601 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.26/attn/o_proj/MatMul ...

2024-06-08 14:05:36,604 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.26/mlp/gate_proj/MatMul ...

2024-06-08 14:05:36,791 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.26/mlp/gate_proj/MatMul ...

2024-06-08 14:05:36,801 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.26/mlp/up_proj/MatMul ...

2024-06-08 14:05:36,992 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.26/mlp/up_proj/MatMul ...

2024-06-08 14:05:37,002 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.26/mlp/down_proj/MatMul ...

2024-06-08 14:05:37,203 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.26/mlp/down_proj/MatMul ...

2024-06-08 14:05:37,212 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.27/attn/qkv_proj/MatMul ...

2024-06-08 14:05:37,295 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.27/attn/qkv_proj/MatMul ...

2024-06-08 14:05:37,300 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.27/attn/o_proj/MatMul ...

2024-06-08 14:05:37,371 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.27/attn/o_proj/MatMul ...

2024-06-08 14:05:37,375 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.27/mlp/gate_proj/MatMul ...

2024-06-08 14:05:37,570 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.27/mlp/gate_proj/MatMul ...

2024-06-08 14:05:37,580 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.27/mlp/up_proj/MatMul ...

2024-06-08 14:05:37,774 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.27/mlp/up_proj/MatMul ...

2024-06-08 14:05:37,783 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.27/mlp/down_proj/MatMul ...

2024-06-08 14:05:37,997 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.27/mlp/down_proj/MatMul ...

2024-06-08 14:05:38,006 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.28/attn/qkv_proj/MatMul ...

2024-06-08 14:05:38,091 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.28/attn/qkv_proj/MatMul ...

2024-06-08 14:05:38,095 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.28/attn/o_proj/MatMul ...

2024-06-08 14:05:38,158 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.28/attn/o_proj/MatMul ...

2024-06-08 14:05:38,161 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.28/mlp/gate_proj/MatMul ...

2024-06-08 14:05:38,378 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.28/mlp/gate_proj/MatMul ...

2024-06-08 14:05:38,387 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.28/mlp/up_proj/MatMul ...

2024-06-08 14:05:38,578 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.28/mlp/up_proj/MatMul ...

2024-06-08 14:05:38,588 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.28/mlp/down_proj/MatMul ...

2024-06-08 14:05:38,773 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.28/mlp/down_proj/MatMul ...

2024-06-08 14:05:38,783 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.29/attn/qkv_proj/MatMul ...

2024-06-08 14:05:38,865 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.29/attn/qkv_proj/MatMul ...

2024-06-08 14:05:38,869 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.29/attn/o_proj/MatMul ...

2024-06-08 14:05:38,930 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.29/attn/o_proj/MatMul ...

2024-06-08 14:05:38,933 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.29/mlp/gate_proj/MatMul ...

2024-06-08 14:05:39,119 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.29/mlp/gate_proj/MatMul ...

2024-06-08 14:05:39,129 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.29/mlp/up_proj/MatMul ...

2024-06-08 14:05:39,315 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.29/mlp/up_proj/MatMul ...

2024-06-08 14:05:39,324 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.29/mlp/down_proj/MatMul ...

2024-06-08 14:05:39,527 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.29/mlp/down_proj/MatMul ...

2024-06-08 14:05:39,537 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.30/attn/qkv_proj/MatMul ...

2024-06-08 14:05:39,620 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.30/attn/qkv_proj/MatMul ...

2024-06-08 14:05:39,625 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.30/attn/o_proj/MatMul ...

2024-06-08 14:05:39,693 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.30/attn/o_proj/MatMul ...

2024-06-08 14:05:39,697 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.30/mlp/gate_proj/MatMul ...

2024-06-08 14:05:39,928 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.30/mlp/gate_proj/MatMul ...

2024-06-08 14:05:39,937 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.30/mlp/up_proj/MatMul ...

2024-06-08 14:05:40,132 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.30/mlp/up_proj/MatMul ...

2024-06-08 14:05:40,142 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.30/mlp/down_proj/MatMul ...

2024-06-08 14:05:40,356 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.30/mlp/down_proj/MatMul ...

2024-06-08 14:05:40,365 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.31/attn/qkv_proj/MatMul ...

2024-06-08 14:05:40,446 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.31/attn/qkv_proj/MatMul ...

2024-06-08 14:05:40,451 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.31/attn/o_proj/MatMul ...

2024-06-08 14:05:40,515 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.31/attn/o_proj/MatMul ...

2024-06-08 14:05:40,518 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.31/mlp/gate_proj/MatMul ...

2024-06-08 14:05:40,712 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.31/mlp/gate_proj/MatMul ...

2024-06-08 14:05:40,723 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.31/mlp/up_proj/MatMul ...

2024-06-08 14:05:40,903 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.31/mlp/up_proj/MatMul ...

2024-06-08 14:05:40,913 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.31/mlp/down_proj/MatMul ...

2024-06-08 14:05:41,104 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /model/layers.31/mlp/down_proj/MatMul ...

2024-06-08 14:05:41,113 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /lm_head/MatMul ...

2024-06-08 14:05:43,225 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - complete quantization of /lm_head/MatMul ...

C:\Python312\Lib\site-packages\transformers\generation\configuration_utils.py:848: FutureWarning: The `use_auth_token` argument is deprecated and will be removed in v5 of Transformers. Please use `token` instead.

warnings.warn(

Saving GenAI config in Meta-Llama-3-8B-Instruct-onnx-cuda-int4

C:\Python312\Lib\site-packages\transformers\models\auto\tokenization_auto.py:769: FutureWarning: The `use_auth_token` argument is deprecated and will be removed in v5 of Transformers. Please use `token` instead.

warnings.warn(

tokenizer_config.json: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 51.0k/51.0k [00:00<00:00, 51.0MB/s]

tokenizer.json: 100%|██████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 9.09M/9.09M [00:00<00:00, 18.4MB/s]

special_tokens_map.json: 100%|███████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████████| 73.0/73.0 [00:00<?, ?B/s]

Special tokens have been added in the vocabulary, make sure the associated word embeddings are fine-tuned or trained.

Saving processing files in Meta-Llama-3-8B-Instruct-onnx-cuda-int4 for GenAI
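The builder logs one `start to quantize` / `complete quantization of` pair for every MatMul it converts to 4-bit. If every decoder layer follows the pattern shown above (`qkv_proj`, `o_proj`, `gate_proj`, `up_proj`, `down_proj`), the 32 layers of Llama-3-8B (`layers.0` to `layers.31`) plus `lm_head` give 32 × 5 + 1 = 161 quantized MatMuls. Below is a minimal sketch, assuming you saved the console output to a text file (the script and file names are hypothetical). It pairs those lines to count the nodes and time each one. It only relies on the log line format visible above, not on any ONNX Runtime API.

```python
import re
import sys
from collections import Counter
from datetime import datetime

# Matches quantizer lines as they appear in the log above, e.g.
# 2024-06-08 14:05:26,292 onnxruntime.quantization.matmul_4bits_quantizer [INFO] - start to quantize /model/layers.13/attn/qkv_proj/MatMul ...
LINE_RE = re.compile(
    r"^(?P<ts>\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2},\d{3}) "
    r"onnxruntime\.quantization\.matmul_4bits_quantizer \[INFO\] - "
    r"(?P<event>start to quantize|complete quantization of) (?P<node>\S+) \.\.\.$"
)


def summarize(log_text):
    """Return {node_name: seconds} for every MatMul with both a start and a complete line."""
    starts = {}
    durations = {}
    for line in log_text.splitlines():
        m = LINE_RE.match(line.strip())
        if not m:
            continue  # skip blank lines, warnings and progress bars
        ts = datetime.strptime(m["ts"], "%Y-%m-%d %H:%M:%S,%f")
        if m["event"] == "start to quantize":
            starts[m["node"]] = ts
        elif m["node"] in starts:
            durations[m["node"]] = (ts - starts.pop(m["node"])).total_seconds()
    return durations


def report(durations):
    """Print node count and time grouped by projection kind (qkv_proj, lm_head, ...)."""
    count_per_kind = Counter()
    seconds_per_kind = Counter()
    for node, secs in durations.items():
        kind = node.split("/")[-2]  # "/model/layers.13/attn/qkv_proj/MatMul" -> "qkv_proj"
        count_per_kind[kind] += 1
        seconds_per_kind[kind] += secs
    print(f"{len(durations)} MatMul nodes quantized, {sum(durations.values()):.1f} s total")
    for kind, n in count_per_kind.most_common():
        print(f"  {kind:10s} {n:3d} nodes  {seconds_per_kind[kind]:6.2f} s")


if __name__ == "__main__":
    # Hypothetical usage: python summarize_quant_log.py builder.log
    if len(sys.argv) > 1:
        with open(sys.argv[1], encoding="utf-8") as f:
            report(summarize(f.read()))
```

In the excerpt above most MatMuls take roughly 0.06 to 0.25 s each, while `lm_head` takes about 2.1 s (14:05:41,113 to 14:05:43,225). That matches its much larger weight matrix, which projects to the full vocabulary.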